Troubleshooting a UDP Packet Loss Issue on Linux

Introduction

A few years ago, while working on a QUIC-related project, I encountered a very peculiar case of UDP packet loss in a Linux environment. It took me several days to pinpoint the issue, so I’ve decided to document and summarize it.

Definition of Terms

QUIC: A UDP-Based Multiplexed and Secure Transport

Connection: A QUIC connection is shared state between a client and a server.

Connection ID: An identifier for a QUIC connection.

Connection Migration: The use of a connection ID allows connections to survive changes to endpoint addresses (IP address and port), such as those caused by an endpoint migrating to a new network.

QUIC client: My QUIC client

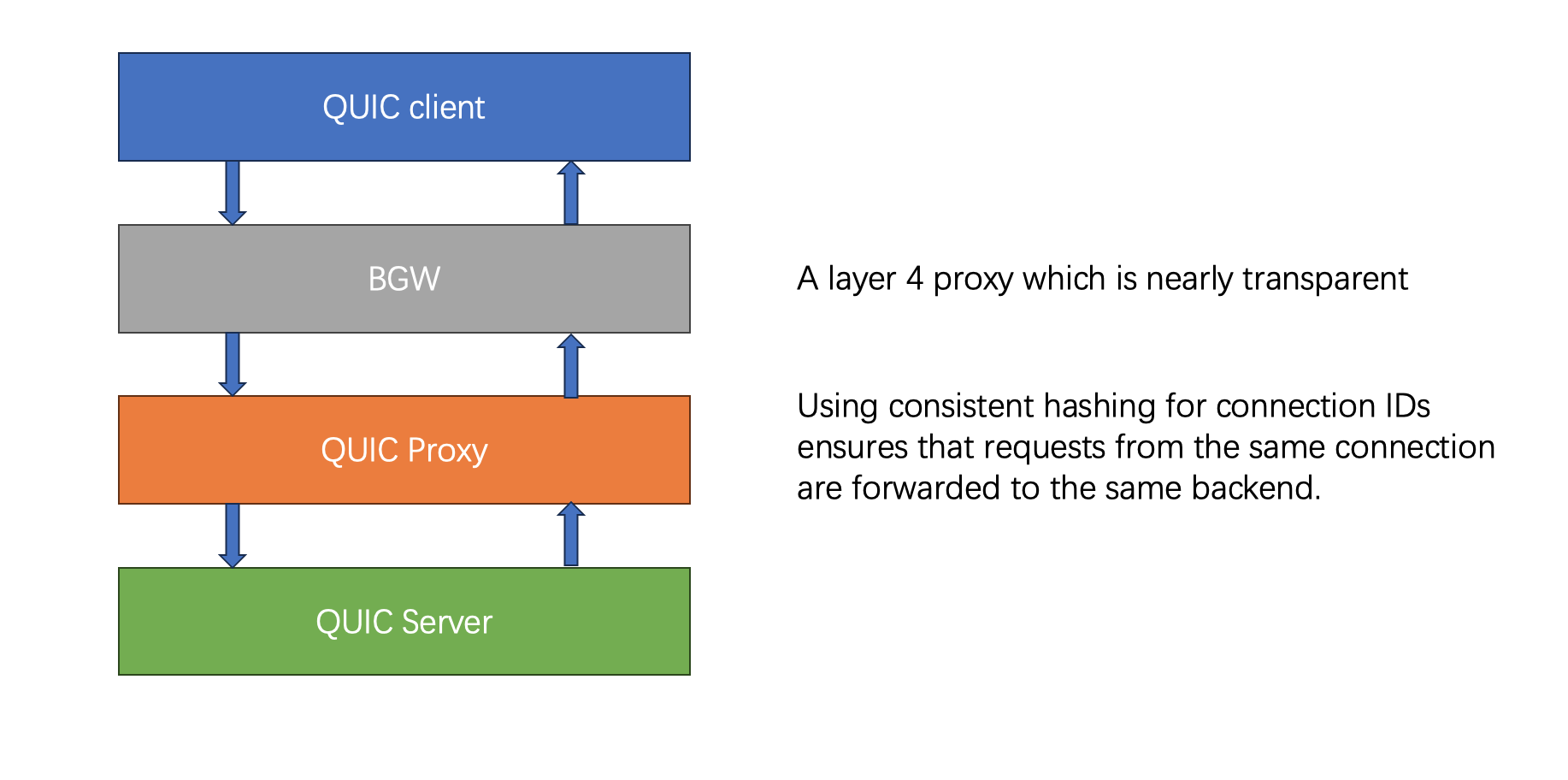

BGW: My company's layer 4 gateway, a proxy that is transparent to users.

Proxy Server: A proxy that forwards upstream and downstream packets.

QUIC Server: My QUIC server, which handles QUIC connections.

quic-go: A QUIC implementation in pure Go

Background

The architecture, as depicted in the diagram, is relatively simple.

The issue arises when the number of QUIC connections exceeds a certain limit (typically around 150,000) and persists for some time. This leads to sporadic packet loss, exacerbated by QUIC’s retransmission mechanism, which can escalate into a cascade effect.

During our project, QUIC was still a draft protocol, and we made modifications to it. For instance, QUIC's handshake packets are large because the business server has to send certificates during the handshake. We experimented with letting the BGW server handle the first phase of the handshake and the certificate delivery, which caused problems of its own, for example with sequence numbers. These details are not pertinent to this article. Looking back, such changes were not ideal for seamless business operations.

The Connection ID is what makes Connection Migration possible: the same connection can still be identified even if the client's IP and port change. In a typical setup with multiple backend servers, migration only succeeds if the proxy server keeps forwarding packets with the same Connection ID to the same target machine, regardless of changes in the client's IP and port. The proxy server is implemented in C++ using epoll, runs as a ***single-threaded*** program, and uses consistent hashing on the Connection ID to forward UDP packets.
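As a rough illustration of Connection ID based routing, here is a minimal consistent-hashing sketch in Python. It is not the proxy's actual C++ code: the class name `ConsistentHashRing`, the virtual-node count, the use of SHA-256, and the backend addresses are all assumptions made for the example.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Maps a QUIC Connection ID to a backend via a hash ring with virtual nodes.

    Hypothetical helper for illustration; not taken from the original proxy.
    """

    def __init__(self, backends, vnodes=100):
        if not backends:
            raise ValueError("at least one backend is required")
        ring = []
        for backend in backends:
            # Several points per backend spread the load more evenly.
            for i in range(vnodes):
                ring.append((self._hash(f"{backend}#{i}".encode()), backend))
        ring.sort()
        self._points = [point for point, _ in ring]
        self._backends = [backend for _, backend in ring]

    @staticmethod
    def _hash(data):
        # First 8 bytes of SHA-256 as an unsigned 64-bit integer.
        return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")

    def backend_for(self, connection_id):
        # The first ring point clockwise from the key's hash owns the key.
        idx = bisect.bisect_right(self._points, self._hash(connection_id))
        return self._backends[idx % len(self._backends)]
```

Because the lookup depends only on the Connection ID, a client that changes its IP or port still lands on the same backend. Removing a backend only remaps the connections that were assigned to it.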

Problem Detection

When the number of connections is low, the connections remain stable. However, during stress testing with a higher number of connections, they start disconnecting after a while, with the primary reason being timeouts. To pinpoint the cause of these disconnections, I conducted the following investigations.

Packet Loss Investigation

Monitoring Key Metrics

- Observed CPU, memory, disk I/O, and network I/O across all stages.

- None of these metrics hit performance bottlenecks, but network I/O spiked when connections began to drop and stabilized when only a few connections remained.

- Preliminary conclusion: possible network packet loss and QUIC retransmissions.

Packet Analysis with tcpdump

- Attempted to analyze packet loss and retransmissions.

- Packet capturing is feasible with fewer connections and lower traffic.

- With over 100,000 long connections, capturing and analyzing packets is inefficient due to high data rates. Hence, this approach was temporarily abandoned.

Intermediate Step Elimination

- BGW (a core company infrastructure component) was unlikely to be the issue but not ruled out entirely.

- Established two alternate paths:

  - QUIC Client -> QUIC Server

  - QUIC Client -> QUIC Proxy -> QUIC Server

- The first path showed no issues, while the second path had problems, indicating a potential issue with the QUIC proxy.

- Identified possible scenarios:

  - QUIC client failed to send packets, lost during transmission.

  - QUIC client sent packets, but QUIC proxy didn’t receive them.

  - QUIC proxy received packets but didn’t forward them.

  - QUIC proxy forwarded packets, but QUIC server didn’t receive them.

Log Sampling and Analysis

- To identify the problematic stage, I added logging throughout the entire path.

- Logging for all connections caused disk I/O bottlenecks, so I implemented sampling (logging for connections where Connection ID % 10000 == 1).

- Debug log analysis revealed that the QUIC client sent packets, but the QUIC proxy didn’t receive them.

- Preliminary conclusion: two potential causes:

  - QUIC client sent packets, but they were lost during transmission.

  - Packets were lost during reception by the QUIC proxy (focus area for further investigation).
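The sampling rule mentioned above (Connection ID % 10000 == 1) can be sketched as follows. This is only an illustration: the function names are hypothetical, and treating the Connection ID bytes as a big-endian integer is an assumption, since the article does not say how the ID was converted to a number.

```python
import logging

SAMPLE_MODULUS = 10000   # from the article: Connection ID % 10000 == 1
SAMPLE_REMAINDER = 1


def is_sampled(connection_id):
    """Return True for the roughly 1 in 10,000 connections that get debug logs.

    Assumption: the Connection ID bytes are read as a big-endian integer.
    """
    value = int.from_bytes(connection_id, "big")
    return value % SAMPLE_MODULUS == SAMPLE_REMAINDER


def log_packet(logger, stage, connection_id, size):
    """Log one packet event for sampled connections only; return whether it logged."""
    if not is_sampled(connection_id):
        return False
    logger.debug("stage=%s cid=%s bytes=%d", stage, connection_id.hex(), size)
    return True
```

Applying the same predicate at every stage (client, proxy, server) means the same connections are logged end to end, so a packet that appears in the client log but not in the proxy log can be attributed to that hop.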

Reviewing Monitoring Details

- Upon revisiting the monitoring data, I noticed a new detail: the network IN on the QUIC proxy machine was significantly higher than the network OUT.

- This suggests that data packets are reaching the machine where the proxy is located, but the proxy application is not receiving them.

Detailed Cause Identification

To further investigate why the QUIC proxy application is not receiving the packets, I conducted the following steps:

The sending process wasn’t analyzed because it’s similar to receiving but in reverse, and packet loss is less likely, occurring only when the application’s send rate exceeds the kernel and network card’s processing rate.

Check for Packet Loss at the NIC Level

- Result: No packet loss detected.

- Command Used: ethtool -S / ifconfig
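For reference, the interface-level counters that `ifconfig` displays on Linux are also exposed in `/proc/net/dev`. The sketch below parses that file's text into per-interface counters; the function name `parse_net_dev` is made up for this example, and it covers only a few of the columns.

```python
def parse_net_dev(text):
    """Parse the contents of /proc/net/dev into {interface: counters}.

    Hypothetical helper; only a subset of the 16 columns is kept.
    """
    stats = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # the two header lines contain no colon
        name, values = line.split(":", 1)
        fields = values.split()
        if len(fields) < 16:
            continue  # unexpected layout, skip rather than guess
        stats[name.strip()] = {
            "rx_bytes": int(fields[0]),
            "rx_packets": int(fields[1]),
            "rx_errs": int(fields[2]),
            "rx_drop": int(fields[3]),
            "rx_fifo": int(fields[4]),
            "tx_bytes": int(fields[8]),
            "tx_packets": int(fields[9]),
        }
    return stats
```

On a Linux host this could be called as `parse_net_dev(open("/proc/net/dev").read())`. Taking two snapshots a few seconds apart shows whether `rx_drop` or `rx_errs` is growing, and the same data allows a comparison of received and transmitted bytes.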

Check for UDP Protocol Packet Loss

- Result: Packet receive errors are rapidly increasing at a rate of 10k per second, and RcvbufErrors are also increasing. The rate of increase for packet receive errors is much higher than for RcvbufErrors.
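The article does not say which command produced these numbers. On Linux, `netstat -su` reports "packet receive errors" and "receive buffer errors", which correspond to the `InErrors` and `RcvbufErrors` columns of the `Udp:` rows in `/proc/net/snmp`. The sketch below parses those rows and computes per-second rates between two snapshots; the function names are hypothetical.

```python
def parse_udp_counters(snmp_text):
    """Extract the Udp counters from the contents of /proc/net/snmp.

    The file holds a header row and a value row, both starting with "Udp:".
    """
    rows = [line.split() for line in snmp_text.splitlines()
            if line.startswith("Udp:")]
    if len(rows) < 2:
        raise ValueError("Udp header and value rows not found")
    names, values = rows[0][1:], rows[1][1:]
    return dict(zip(names, (int(v) for v in values)))


def counter_rates(before, after, interval_seconds):
    """Per-second growth of each counter present in both snapshots."""
    return {name: (after[name] - before[name]) / interval_seconds
            for name in after if name in before}
```

Reading `/proc/net/snmp` twice, a known number of seconds apart, and passing both snapshots to `counter_rates` gives the growth rate of `InErrors` and `RcvbufErrors` side by side.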

Check UDP Buffer Size

- Current Sizes: System UDP buffer size is 256k, application UDP buffer size is 2M.

- Adjustment: Even after increasing the system UDP buffer size to 25M, RcvbufErrors continue to grow.

- Conclusion: The main cause of packet loss appears to be packet receive errors.
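When comparing a system-wide limit with the size an application asks for, it can help to check what the socket actually ends up with. The sketch below assumes that the "system UDP buffer size" refers to the `net.core.rmem_max` and `net.core.rmem_default` sysctls, which the article does not state. On Linux, a request made with `SO_RCVBUF` is capped at `net.core.rmem_max`, and the kernel doubles the stored value to leave room for bookkeeping overhead.

```python
import socket


def effective_rcvbuf(requested_bytes):
    """Request a UDP receive buffer size and return what the kernel granted.

    On Linux the returned value is typically twice the (possibly capped)
    request, because the kernel doubles it for bookkeeping overhead.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, requested_bytes)
        return sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
```

For example, `effective_rcvbuf(2 * 1024 * 1024)` shows whether a 2M request is honored or silently reduced by the system limit.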

Check Firewall Status

- Result: Firewall is disabled.

Check Application Load

- Result: CPU, memory, and disk I/O loads are all low.

Check Application Processing Logic

- Result: The application uses single-threaded synchronous processing with simple logic to forward packets.

- Potential Issue: The simplicity and single-threaded nature of the processing logic might be the source of the problem.

At this point, it can be preliminarily determined that the proxy’s processing capability is insufficient. After carefully reviewing the proxy’s code, it was found that the proxy uses a single-threaded epoll event loop to receive and synchronously forward packets.

With additional logging and statistics, it was observed that the processing time for each packet is approximately 20-50 microseconds. Therefore, the single-threaded synchronous processing can handle 20,000 to 50,000 packets per second. If the number of packets exceeds this processing capacity, packets are likely to be dropped.
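The throughput estimate is simple arithmetic, shown here as a small sketch. The function names are made up for the example, and the per-connection figure assumes the load is spread evenly across connections, which is a simplification.

```python
def max_packets_per_second(per_packet_microseconds):
    """Upper bound for one thread that handles packets strictly one at a time."""
    return 1_000_000 / per_packet_microseconds


def seconds_between_packets(connections, packets_per_second):
    """Average interval at which each connection can get one packet processed,
    assuming capacity is shared evenly among all connections."""
    return connections / packets_per_second
```

With 20 to 50 microseconds per packet, this gives 50,000 down to 20,000 packets per second. At around 150,000 connections, the point where the problem appeared, each connection could on average have only one packet forwarded every 3 to 7.5 seconds, so retransmissions quickly push the proxy past its limit.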

Verification

The optimal solution would be to refactor the proxy logic to use multi-threading and asynchronous processing. This can significantly increase the proxy’s throughput, but it requires substantial changes to the code logic. Therefore, as a quick validation, I first started multiple proxy processes, each listening on its own port. With this setup, the QUIC server could reliably maintain hundreds of thousands of connections.
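The idea of running several single-threaded forwarders side by side can be sketched as below. This is not the original C++ proxy: the function names are hypothetical, only the upstream direction is forwarded, and Connection ID hashing and the return path are left out to keep the example short.

```python
import multiprocessing
import socket


def bind_udp(host, port):
    """Create a UDP socket bound to host:port (port 0 lets the OS choose)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def forward_packets(sock, backend, limit=None):
    """Receive datagrams on sock and forward each one to backend.

    Runs forever unless limit is given; returns the number forwarded.
    """
    forwarded = 0
    while limit is None or forwarded < limit:
        data, _client = sock.recvfrom(65535)
        sock.sendto(data, backend)
        forwarded += 1
    return forwarded


def worker(host, port, backend):
    """Entry point of one process: its own socket, its own receive buffer."""
    forward_packets(bind_udp(host, port), backend)


def start_workers(host, ports, backend):
    """Start one forwarding process per listening port."""
    processes = []
    for port in ports:
        proc = multiprocessing.Process(
            target=worker, args=(host, port, backend), daemon=True)
        proc.start()
        processes.append(proc)
    return processes
```

A call such as `start_workers("0.0.0.0", [4433, 4434, 4435, 4436], ("10.0.0.1", 4433))`, with made-up ports and backend address, would start four independent receive loops. Each process has its own socket and kernel receive queue, so the per-process packet rate drops roughly in proportion to the number of ports, provided clients are spread across them.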