Group Chat vs. Live Stream Messaging: A Comparison

Introduction

In today’s digital world, real-time messaging is crucial for communication. Two popular ways of messaging are group chats and live stream messages. Both serve to connect people instantly but in different contexts and styles. This article will explore the technical aspects of these two systems, highlighting their similarities and differences.

Basic Definitions and Use Cases

Group Chats:

Group chats are online spaces where multiple people can communicate in real-time. These are often used for team collaboration, social interactions, or family discussions. In group chats, messages are stored, and participants can review the conversation history at any time. Apps like Slack, Discord, and WhatsApp are common examples.

Use Cases for Group Chats:

Team Collaboration: Team members discuss projects, share files, and make decisions together.

Social Groups: Friends and family members stay in touch, share updates, and plan events.

Communities: People with shared interests engage in discussions, exchange ideas, and offer support.

Live Stream Messages:

Live stream messages are real-time comments or interactions that happen during a live video broadcast. These messages appear instantly on the screen, allowing the audience to interact with the streamer and each other. Platforms like Twitch, YouTube Live, and Facebook Live provide these features.

Use Cases for Live Stream Messages:

Audience Interaction: Viewers ask questions, share thoughts, and react to the content in real-time.

Events and Webinars: Participants engage with speakers, participate in polls, and join Q&A sessions.

Gaming Streams: Gamers interact with their audience, get live feedback, and build a community around their content.

This comparison will delve deeper into the architecture, performance, features, and security aspects of group chats and live stream messages, helping you understand their unique characteristics and applications.

Basic Comparison

| | Group Chat | Live Stream Messages |
|---|---|---|
| Participants | Thousands (1K level) | Millions (1M level) |
| Relationship chain | Present | Absent |
| Membership churn | Low | High |
| Offline messages | Important | Not a focus |
| Lifetime | Long | Short |
| Security | End-to-end encryption (optional) | No encryption |

Based on the above table, there are two main issues for live stream messaging:

User Maintenance:

- Tens of thousands of users join and leave the live stream room every second.

- A single live stream can have millions of users online simultaneously.

- Over the course of a stream, a cumulative total of tens of millions of users may enter the room.

Message Delivery:

- With millions of users online, there is a large volume of incoming and outgoing messages.

- Important messages, such as gifts and co-streaming requests, must be delivered reliably.

Since the first issue is not difficult to solve, the following discussion will focus mainly on the second issue.

Architecture and Infrastructure

Group Chat

Typical Architecture

Message Diffusion

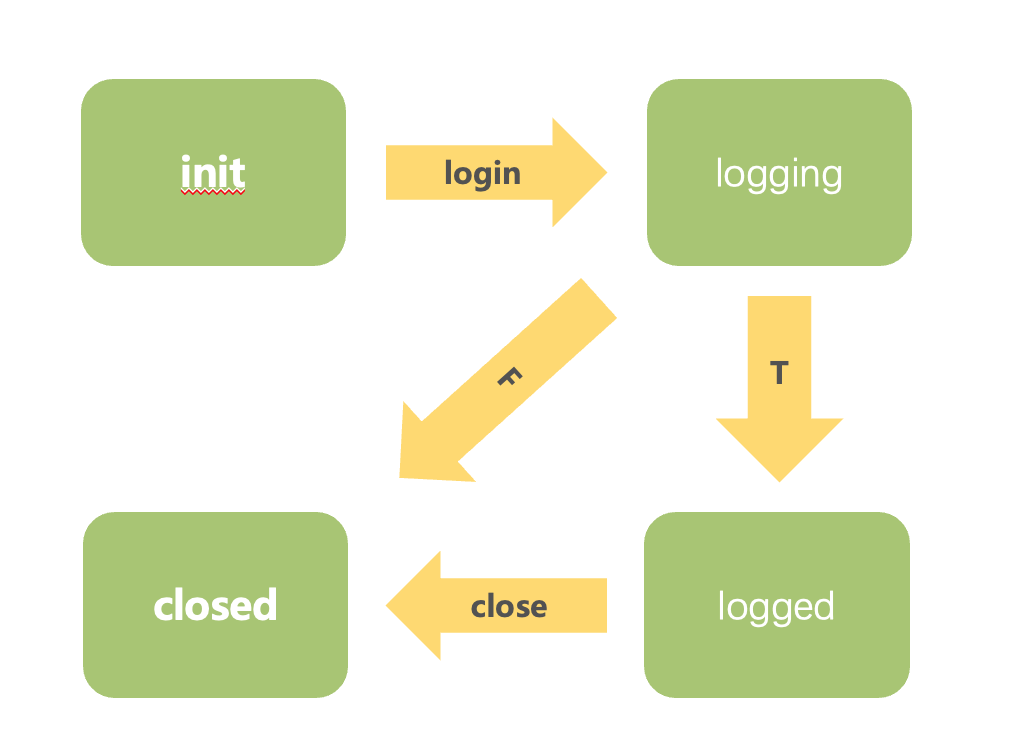

In group chats, there are generally two modes: read diffusion and write diffusion.

Read Diffusion: The group message is stored only once, and all users in the group pull the message from the group’s public mailbox.

Write Diffusion: When a message is sent, it is dispatched into each member’s mailbox.

Strictly speaking, even with read diffusion, where the message body is stored only once, each user’s fetch_msgid, ack_msgid, read_msgid, begin_msgid, etc., are different. Some per-user dispatching, as in write diffusion, is therefore unavoidable. For this reason, this article discusses the write diffusion model directly.
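The two modes can be contrasted with a minimal sketch. The class names, the in-memory lists, and the use of a single `read_msgid` cursor per user are assumptions made for illustration; they are not taken from any particular product.

```python
from collections import defaultdict


class ReadDiffusionGroup:
    """Hypothetical read-diffusion store: one shared log, one cursor per user."""

    def __init__(self, members):
        self.log = []                                 # each message is stored once
        self.read_msgid = {u: 0 for u in members}     # per-user state is still needed

    def send(self, text):
        self.log.append(text)
        return len(self.log)                          # msgid = position in the log

    def fetch(self, user):
        start = self.read_msgid[user]
        self.read_msgid[user] = len(self.log)         # advance this user's cursor
        return self.log[start:]


class WriteDiffusionGroup:
    """Hypothetical write-diffusion store: each message is copied per member."""

    def __init__(self, members):
        self.members = list(members)
        self.mailboxes = defaultdict(list)            # user -> private mailbox

    def send(self, text):
        for user in self.members:                     # one write per group member
            self.mailboxes[user].append(text)

    def fetch(self, user):
        pending, self.mailboxes[user] = self.mailboxes[user], []
        return pending


members = ["alice", "bob", "carol"]
read_group = ReadDiffusionGroup(members)
write_group = WriteDiffusionGroup(members)
for text in ("hello", "world"):
    read_group.send(text)
    write_group.send(text)

# Count stored copies before anyone fetches.
stored_copies = sum(len(box) for box in write_group.mailboxes.values())
print(len(read_group.log), stored_copies)   # 2 6
print(read_group.fetch("bob"))              # ['hello', 'world']
print(write_group.fetch("bob"))             # ['hello', 'world']
```

Even in the read-diffusion class, the `read_msgid` dictionary has one entry per member, which is the per-user state the paragraph above refers to.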

The group experiences two types of diffusion: 1 group -> m users and 1 user -> n devices.

Where m depends on the number of group members, and n depends on the number of devices per user. Typically, m does not exceed 1K, and n does not exceed 5.

If 1,000 groups send a message at the same time, the fan-out can reach about 5 million deliveries (1,000 groups × 1,000 members × 5 devices). As this scale grows, the dispatch process puts enormous pressure on the whole system and consumes ever more resources.
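The two levels of diffusion can be sketched as a nested loop. The function names and the sample users and devices below are hypothetical; the point is only that the number of deliveries grows as groups × m × n.

```python
def dispatch(group_members, user_devices, message):
    """Expand one group message into per-device deliveries (illustrative only)."""
    deliveries = []
    for user in group_members:                       # 1 group -> m users
        for device in user_devices.get(user, []):    # 1 user -> n devices
            deliveries.append((user, device, message))
    return deliveries


def worst_case_deliveries(groups, m, n):
    """Upper bound when every group has m members and every user has n devices."""
    return groups * m * n


members = ["alice", "bob", "carol"]
devices = {
    "alice": ["phone", "laptop"],
    "bob": ["phone"],
    "carol": ["phone", "tablet", "web"],
}
out = dispatch(members, devices, "hello")
print(len(out))                        # 6
print(worst_case_deliveries(1, 3, 3))  # 9
```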

Message Storage and Retrieval

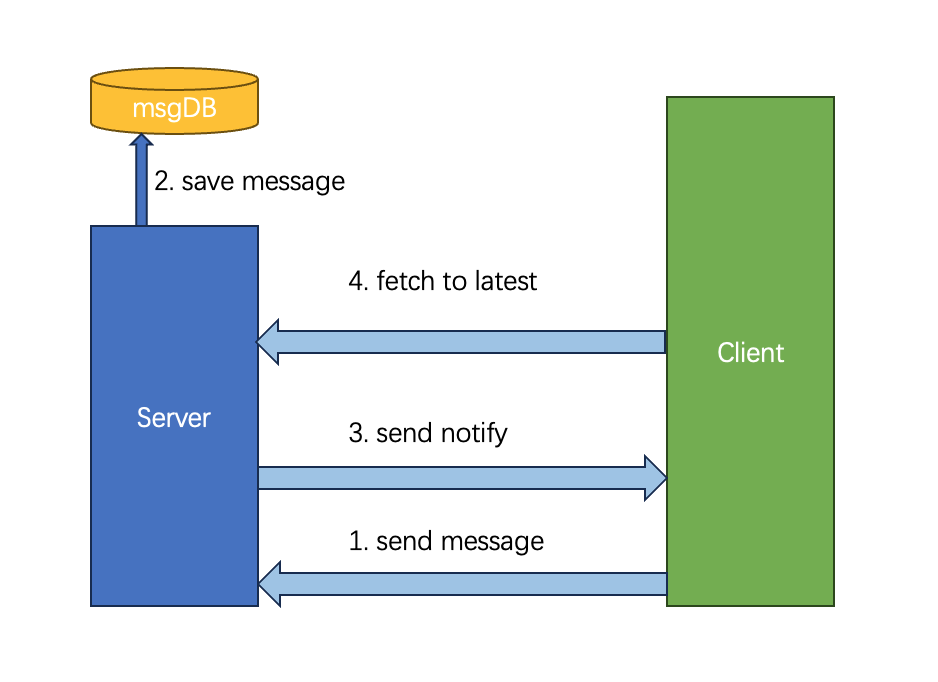

Group chats usually combine push and pull to prevent message loss. If the server only pushed messages to the client, a lost push would mean a lost message. Instead, when the server receives a message, it first stores the message and then notifies the client that a new message has arrived. On receiving the notification, the client pulls the new messages. Even if the notification for a particular message is lost, the client still retrieves the missing message the next time it pulls.
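The notify + pull flow can be sketched as follows. The `Server` and `Client` classes, the in-memory log, and the sequence-number cursor are assumptions for illustration; a real system would persist the log and run over a network.

```python
class Server:
    """Hypothetical message store: stores first, then (elsewhere) notifies."""

    def __init__(self):
        self.log = []                       # sequence number = index + 1

    def store(self, text):
        self.log.append(text)
        return len(self.log)                # sequence number of the new message

    def pull(self, after_seq):
        # Return every stored message newer than the client's cursor.
        return [(i + 1, t) for i, t in enumerate(self.log) if i + 1 > after_seq]


class Client:
    def __init__(self, server):
        self.server = server
        self.last_seq = 0                   # highest sequence number received
        self.inbox = []

    def on_notify(self):
        # A notification carries no payload; it only triggers a pull.
        for seq, text in self.server.pull(self.last_seq):
            self.inbox.append(text)
            self.last_seq = seq


server = Server()
client = Client(server)

server.store("m1")
client.on_notify()      # notification for m1 arrives
server.store("m2")      # notification for m2 is lost: on_notify is never called
server.store("m3")
client.on_notify()      # notification for m3 arrives; the pull also returns m2

print(client.inbox)     # ['m1', 'm2', 'm3']
```

Because the client pulls everything after `last_seq`, a lost notification delays a message but does not lose it.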

Security and Privacy

End-to-end encryption is relatively easy to implement in one-to-one (C2C) chats, but the cost of achieving it in group chats is significantly higher. Feasible solutions exist, such as those used by iMessage and Signal, but they are complex and are not discussed in this article.

Live Stream Messages

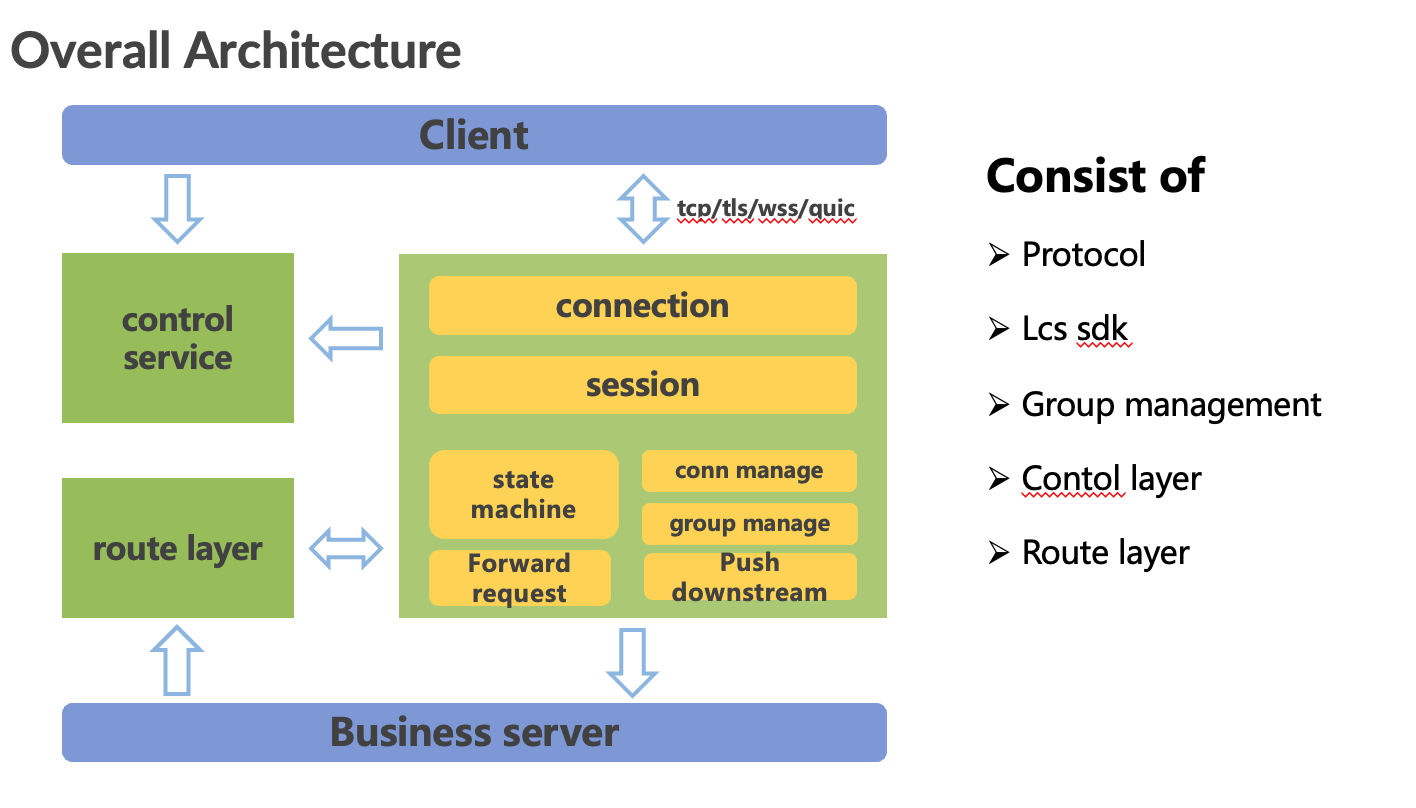

Typical Architecture

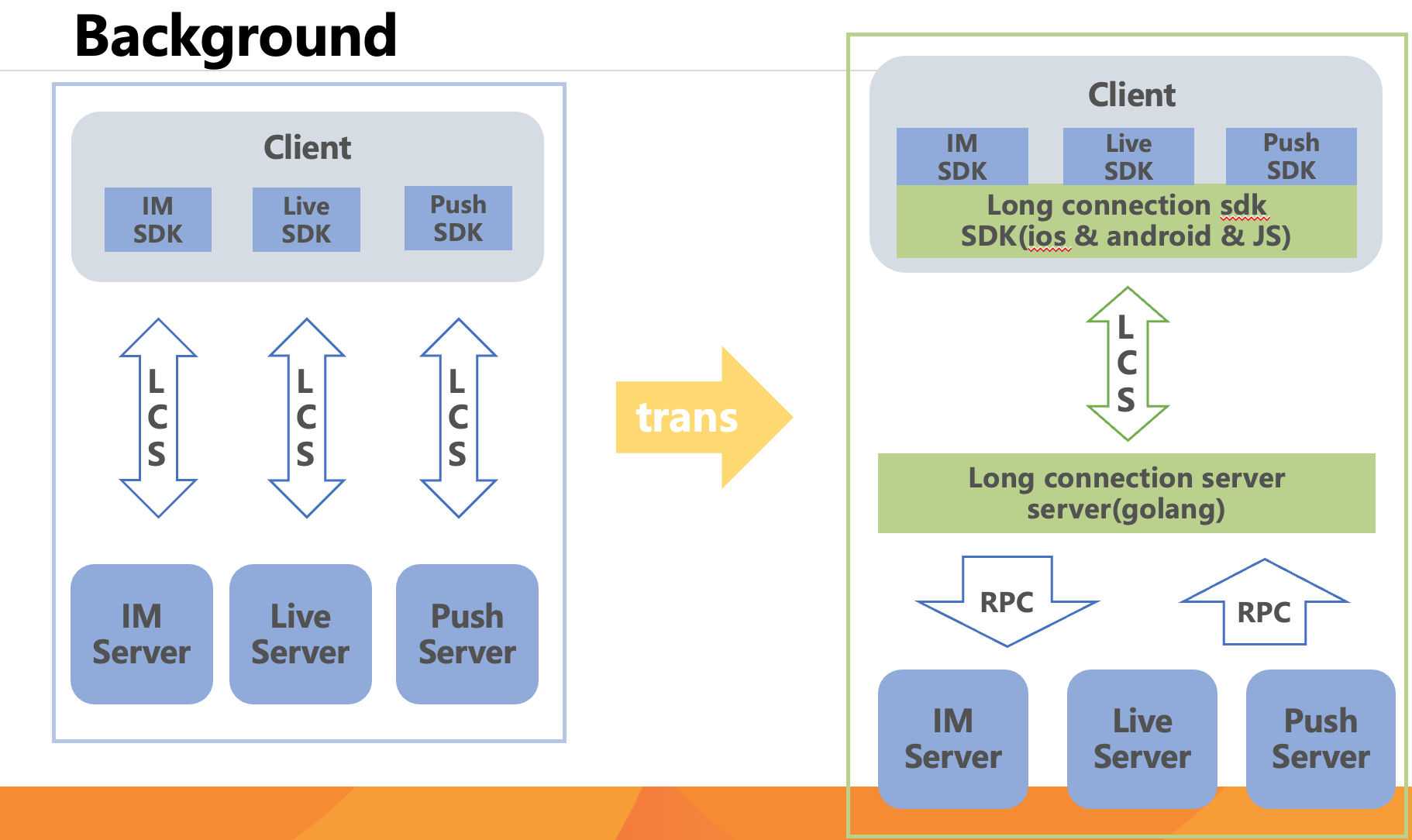

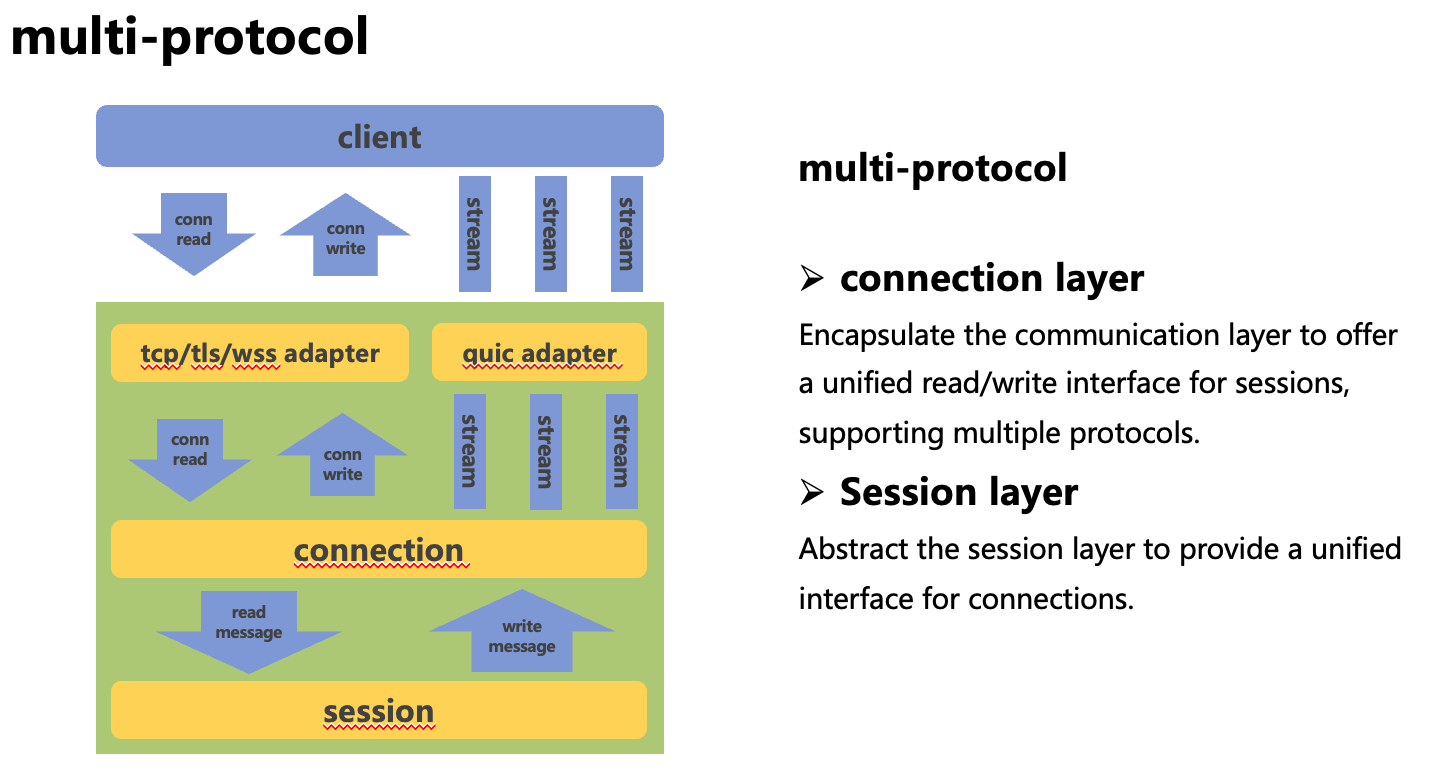

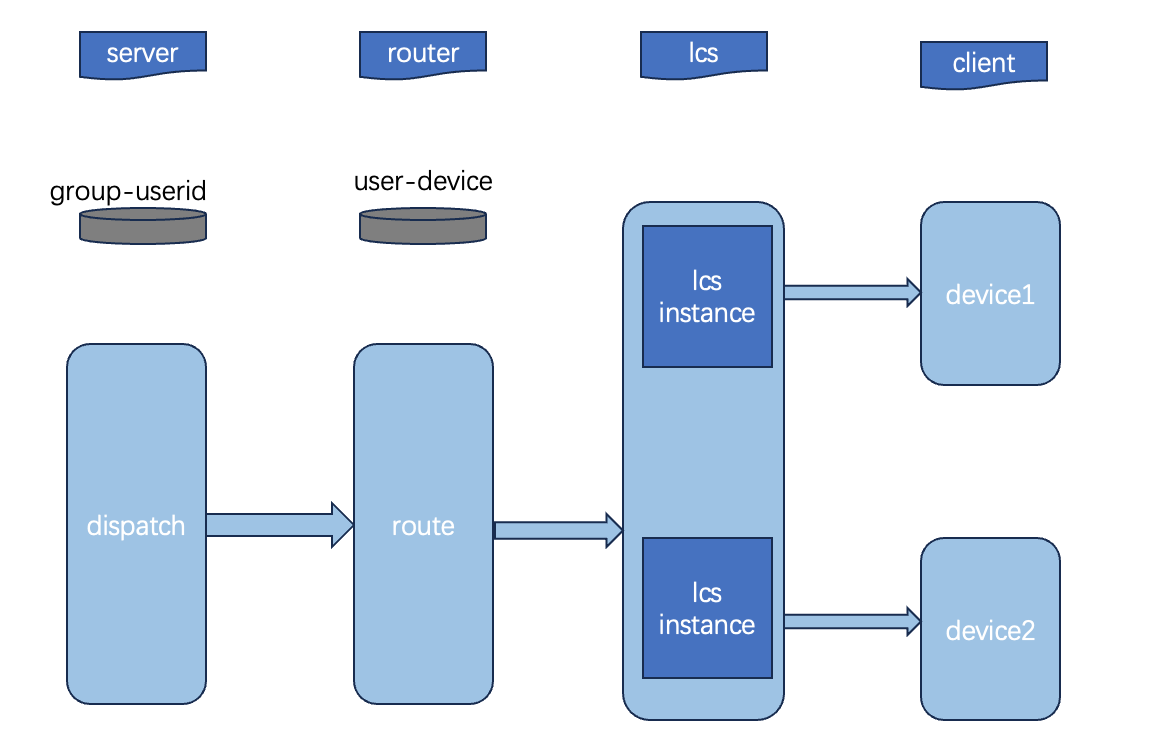

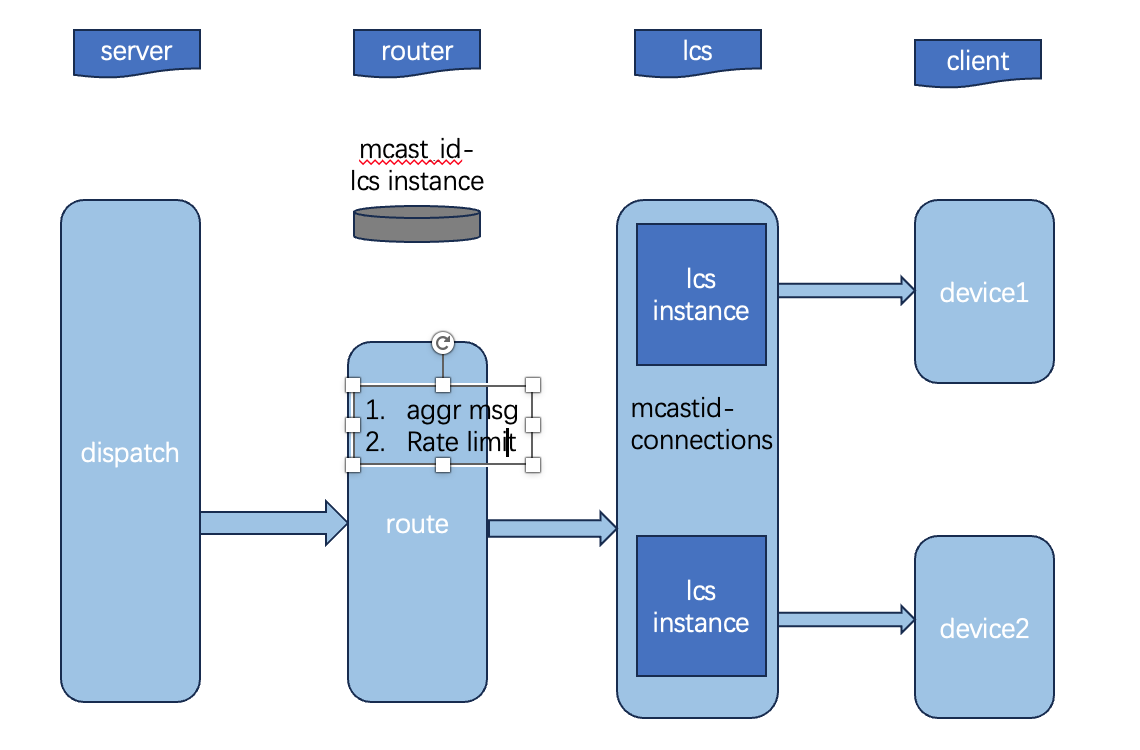

Live stream messages go through two amplification stages: 1 -> M and 1 -> N.

- M is the number of LCS (Long Connection Service) instances involved, which depends on how many instances are deployed and how many viewers are in the live stream room.

- N is the number of connections on a single instance that have joined a particular multicast.

M can reach hundreds of instances, and since N is maintained in memory, N can easily reach a scale of 100,000.

Therefore, with 100 instances, each with 100,000 connections joining the same room, it is possible to support 10 million simultaneous connections in a single room.
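A minimal sketch of the two stages, assuming a hypothetical `LcsInstance` class that keeps room membership in memory and a hypothetical `publish` function standing in for the 1 -> M step:

```python
class LcsInstance:
    """Hypothetical long-connection instance holding room membership in memory."""

    def __init__(self, name):
        self.name = name
        self.rooms = {}          # room_id -> set of connection ids
        self.delivered = 0

    def join(self, room_id, conn_id):
        self.rooms.setdefault(room_id, set()).add(conn_id)

    def broadcast(self, room_id, message):
        conns = self.rooms.get(room_id, set())
        self.delivered += len(conns)     # stand-in for writing to each socket
        return len(conns)                # stage 2: 1 instance -> N connections


def publish(instances, room_id, message):
    # Stage 1: 1 message -> M instances that actually hold viewers of the room.
    targets = [inst for inst in instances if inst.rooms.get(room_id)]
    return sum(inst.broadcast(room_id, message) for inst in targets)


instances = [LcsInstance(f"lcs-{i}") for i in range(3)]
for conn_id in range(10):
    instances[conn_id % 2].join("room-1", conn_id)   # lcs-2 has no viewers

print(publish(instances, "room-1", "hello"))   # 10
print(100 * 100_000)                           # 10000000
```

The last line repeats the capacity estimate from the text: 100 instances with 100,000 connections each.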

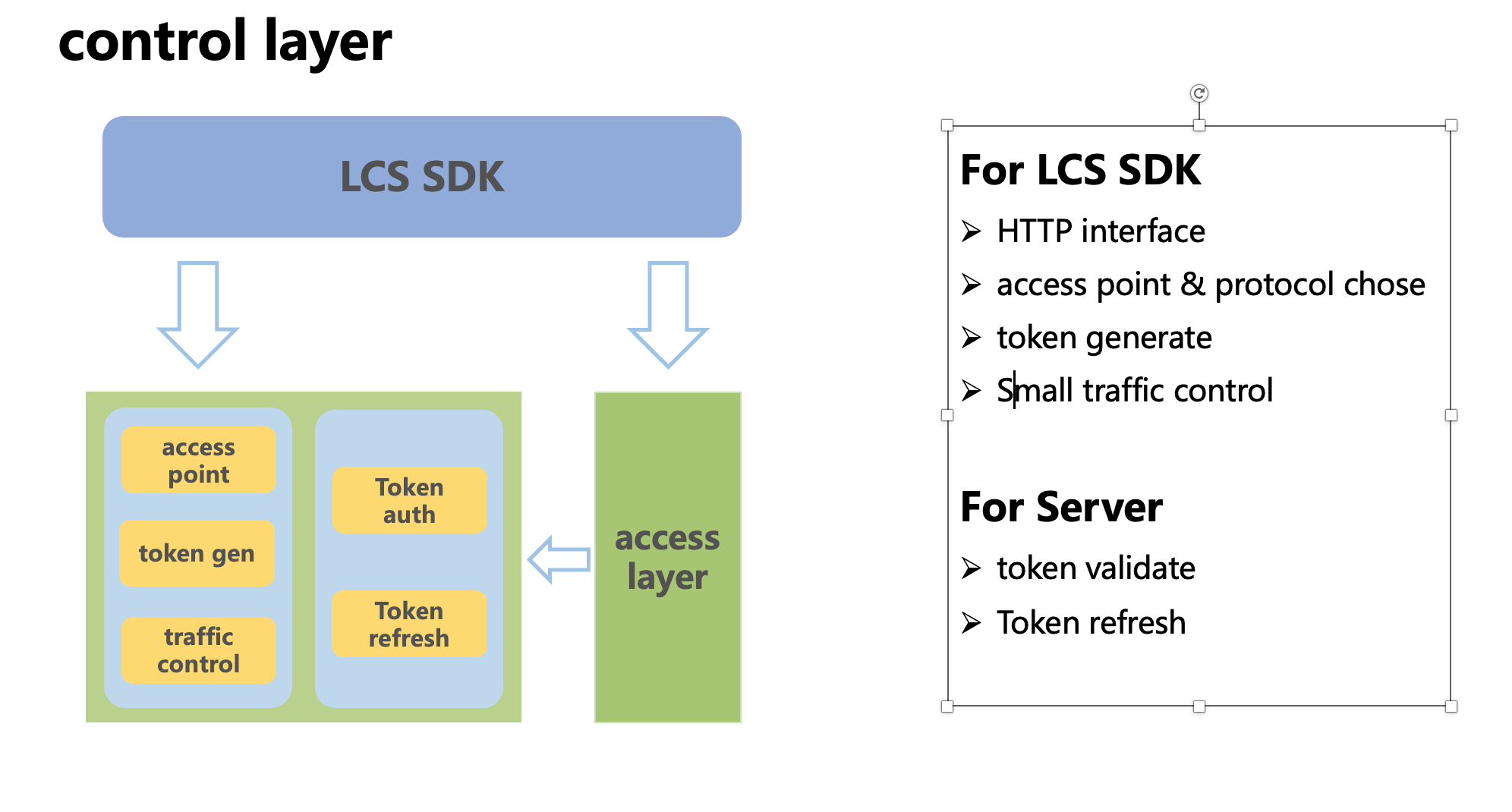

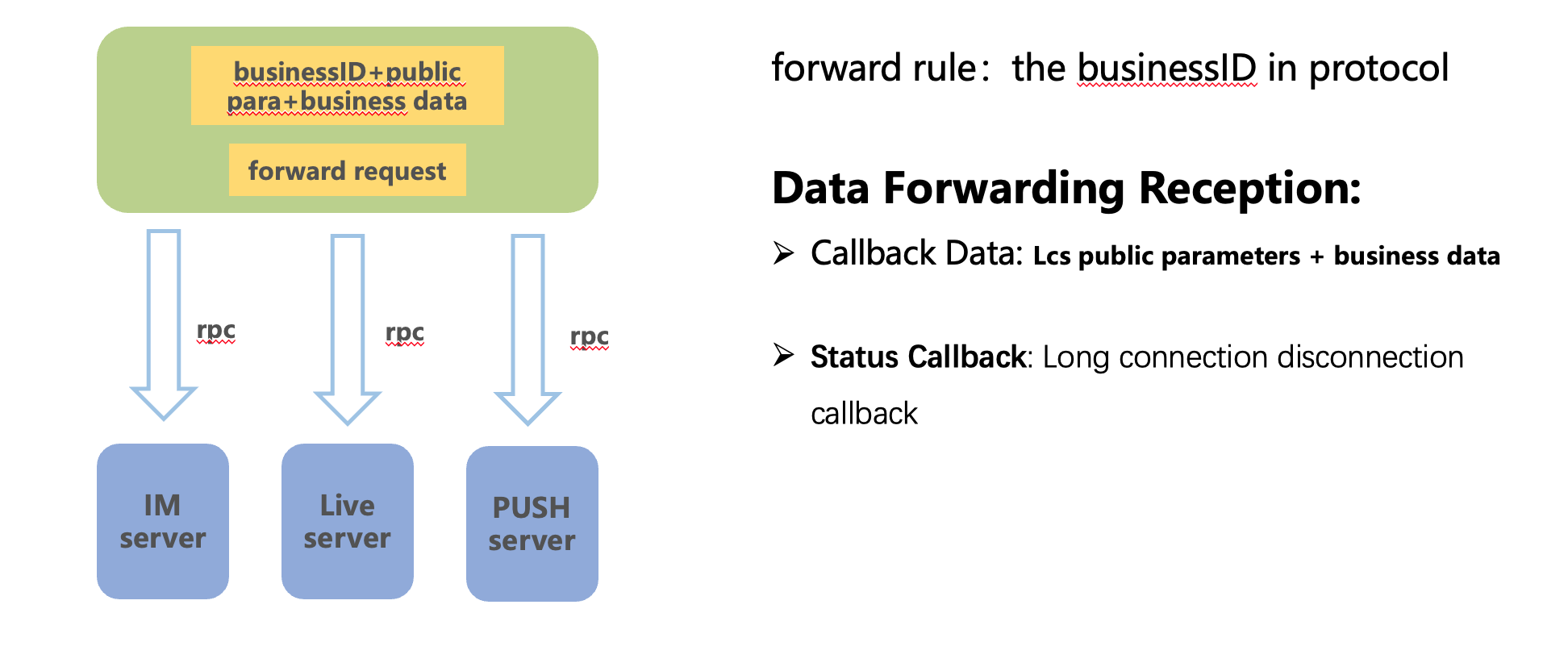

Routing Layer

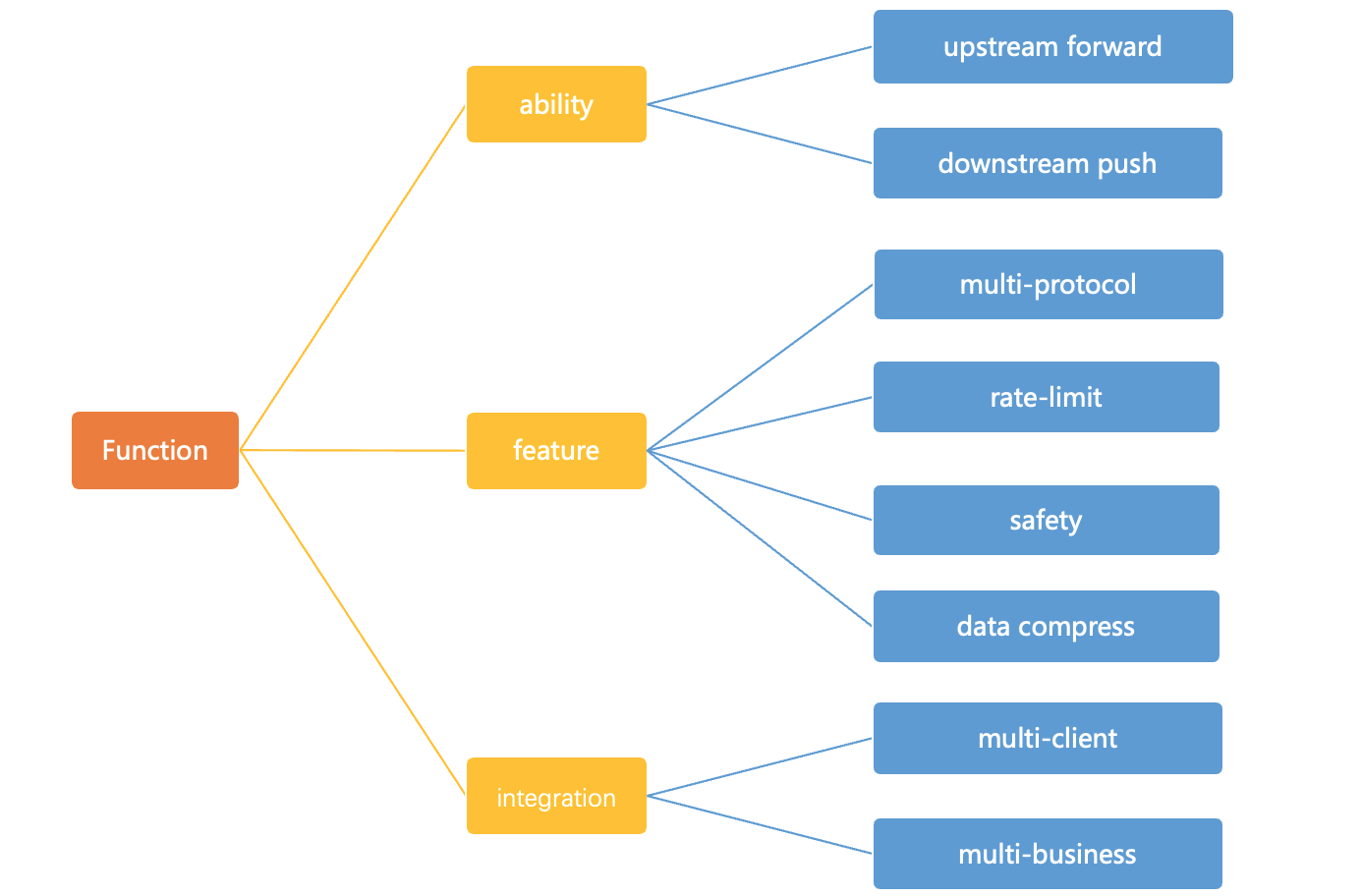

The main functions of the routing layer are:

- To find which long connection instances have connections that joined the room based on the mcastid.

- To aggregate messages so that each message does not need to be notified individually.

- To protect clients through rate limiting. If the message volume exceeds what clients can process, it can be throttled here.

- To prioritize messages, ensuring that high-priority messages are pushed first and not discarded.
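Aggregation, rate limiting, and prioritization can be combined in one batching step. The sketch below is an assumption about how such a step might look, not the routing layer's actual implementation; `build_batch`, the two priority levels, and the per-tick budget `max_batch` are all hypothetical.

```python
HIGH, NORMAL = 0, 1     # lower number = higher priority (illustrative levels)


def build_batch(pending, max_batch):
    """Aggregate pending (priority, text) messages into one batch per tick.

    Returns (batch, carry_over, dropped):
    - batch: up to max_batch texts, high priority first
    - carry_over: high-priority messages that did not fit, kept for the next tick
    - dropped: number of normal-priority messages discarded by the rate limit
    """
    # sorted() is stable, so arrival order is kept within each priority level.
    ordered = sorted(pending, key=lambda m: m[0])
    overflow = ordered[max_batch:]
    carry_over = [m for m in overflow if m[0] == HIGH]
    dropped = len(overflow) - len(carry_over)
    batch = [text for _, text in ordered[:max_batch]]
    return batch, carry_over, dropped


pending = [
    (NORMAL, "hi"),
    (NORMAL, "lol"),
    (HIGH, "gift: rocket"),
    (NORMAL, "gg"),
]
batch, carry_over, dropped = build_batch(pending, max_batch=3)
print(batch)        # ['gift: rocket', 'hi', 'lol']
print(carry_over)   # []
print(dropped)      # 1
```

Sending one batch per tick means connected clients receive a single notification for several messages, and only normal-priority chat is discarded when the budget is exceeded.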

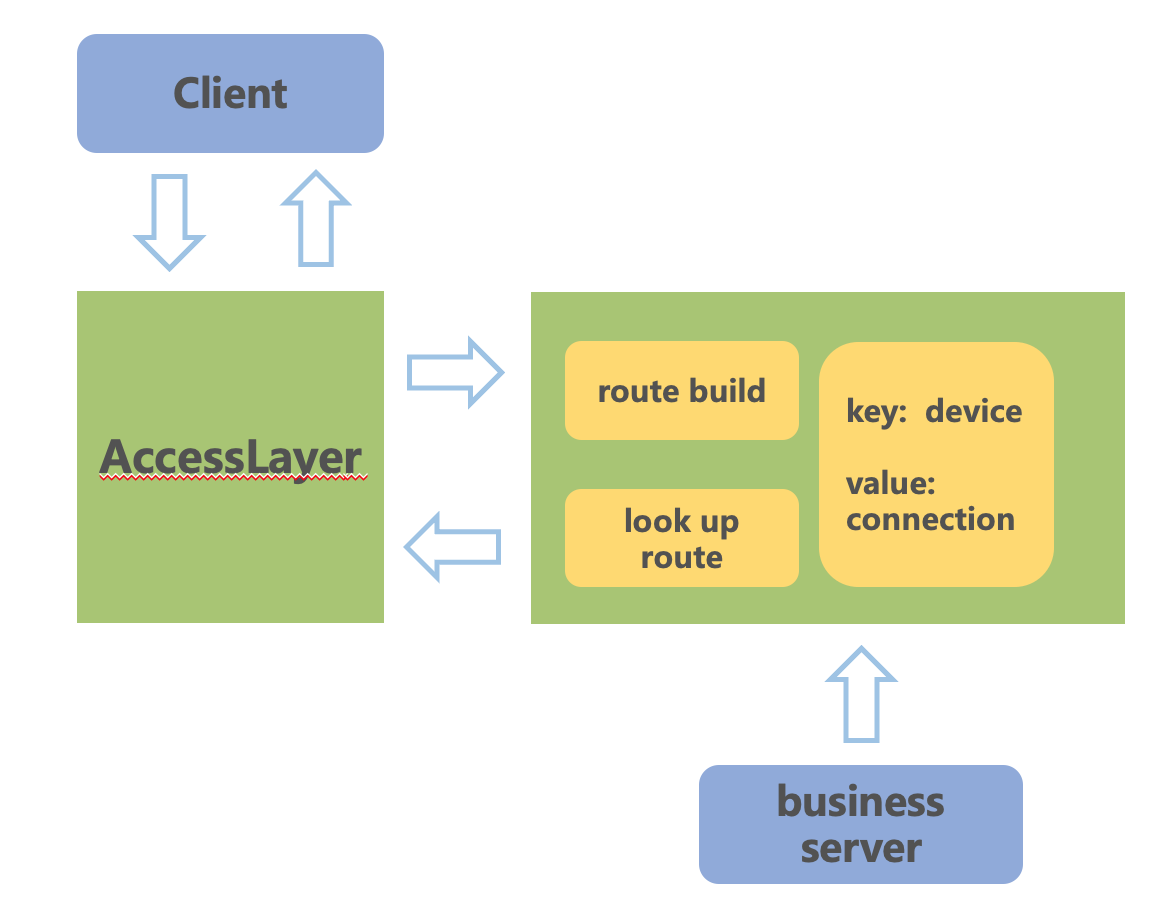

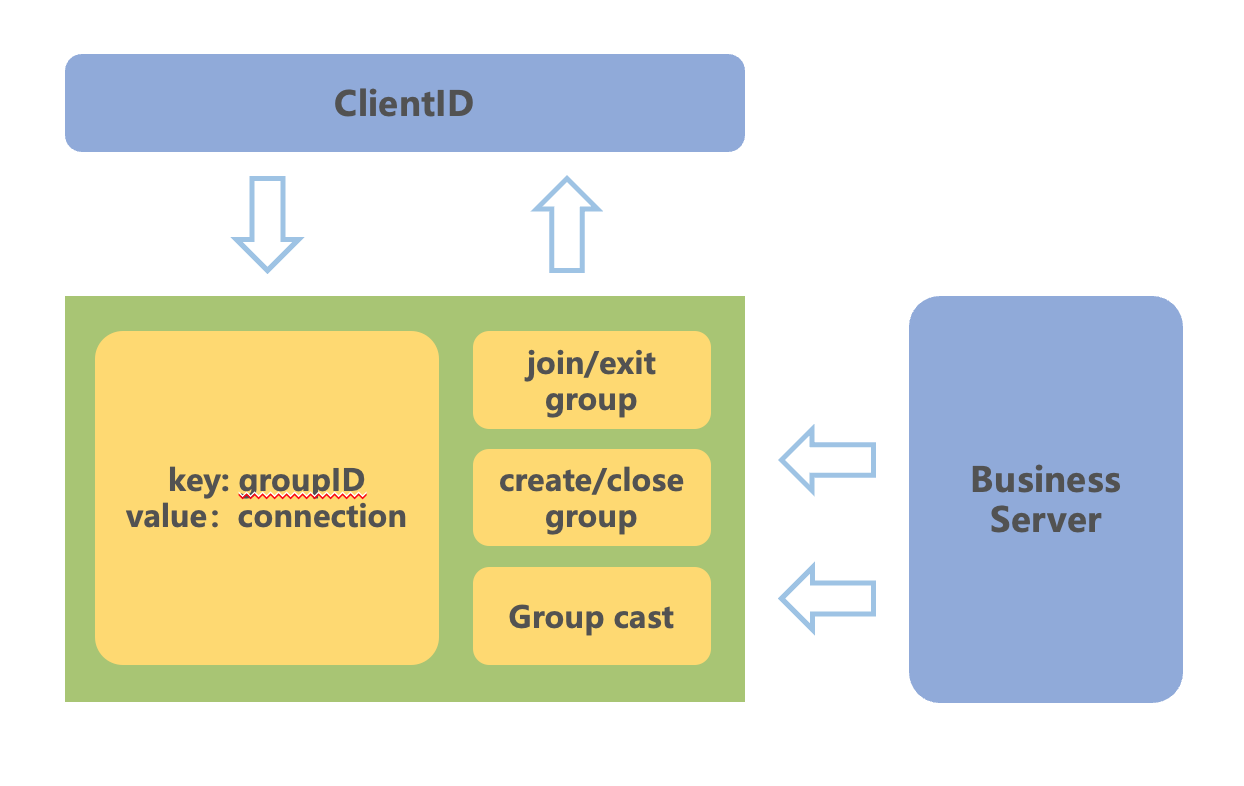

LCS Layer

The main functions of the connection (LCS) layer are:

- To maintain the mapping from roomID to connections.

- To compress messages, significantly reducing bandwidth usage.

- To send messages to the client.
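These three functions can be sketched together. The example assumes JSON encoding and zlib compression from the Python standard library, and a hypothetical `send` callback in place of a real socket write; the actual wire format and compression algorithm are not specified in this article.

```python
import json
import zlib


def encode_batch(messages):
    """Serialize and compress a batch once, before fanning it out."""
    return zlib.compress(json.dumps(messages).encode("utf-8"))


def decode_batch(payload):
    """Client-side inverse of encode_batch."""
    return json.loads(zlib.decompress(payload).decode("utf-8"))


class RoomConnections:
    """Hypothetical in-memory map of roomID -> connection ids."""

    def __init__(self):
        self.rooms = {}

    def join(self, room_id, conn_id):
        self.rooms.setdefault(room_id, set()).add(conn_id)

    def leave(self, room_id, conn_id):
        self.rooms.get(room_id, set()).discard(conn_id)

    def push(self, room_id, messages, send):
        payload = encode_batch(messages)       # compress once per batch
        for conn_id in self.rooms.get(room_id, set()):
            send(conn_id, payload)             # same bytes for every connection
        return len(payload)


conns = RoomConnections()
for conn_id in ("c1", "c2", "c3"):
    conns.join("room-1", conn_id)
conns.leave("room-1", "c3")

sent = []
messages = ["nice play!"] * 200
size = conns.push("room-1", messages, lambda c, p: sent.append((c, p)))

print(len(sent))                                   # 2
print(size < len(json.dumps(messages)))            # True
print(decode_batch(sent[0][1]) == messages)        # True
```

Compressing once per batch rather than once per connection keeps CPU cost independent of N, while every connection still benefits from the smaller payload.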

Push Mode or Pull Mode

For group messages, we have to guarantee that messages are not lost, so we use a notify + pull mechanism. For live stream messages, however, we can tolerate occasional message loss in exchange for lower latency. Thus, we typically use push mode, pushing messages directly to the client.

Gift Messages

Gift messages and other important messages matter a great deal to the streamer, so we can raise their priority. However, in push mode a disconnection may still cause message loss. Therefore, we use a separate data stream for gift messages, delivered with the notify + pull mechanism.

Conclusion

Group chats and live stream messages each serve unique purposes in real-time communication. Group chats are ideal for sustained interactions with features like message history and end-to-end encryption, making them suitable for team collaboration and social groups. Live stream messages, however, excel in large-scale, real-time interactions, perfect for events, gaming, and broadcasts.

Technically, group chats use read and write diffusion models for efficient message storage and retrieval, ensuring reliability and security. In contrast, live stream messages prioritize immediacy and scalability, using a push-based system to handle high user volumes and rapid turnover.

Choosing between the two depends on the communication needs, with group chats providing robust, secure interactions and live stream messages offering dynamic, real-time engagement. Understanding these differences helps in selecting the right tool for optimal performance and user satisfaction.